By Dr Petra Bywood, ISCRR Senior Research Officer (retired).

We make decisions multiple times a day, every day, and in many different situations – at work, at home, and in public or social settings. What shall I wear to work today? Should I go to the shop before picking up the children from school? Where shall we go for dinner on Saturday?

We draw on all types of information to make decisions, often subconsciously and in a matter of seconds. Our experiences, personal preferences, lessons learned and social constraints influence each decision. For the most part, those sources of information are sufficient to solve the small problems and get us safely through the day, without disastrous consequences.

However, some problems are complex and need a more measured approach. ‘Wicked problems’ such as climate change, substance abuse or suicide prevention can be difficult to define, have far-reaching impacts and attract hotly contested solutions. Typically, knowledge about such complex problems is incomplete, conflicting and mutable. Therefore, strategic decisions should be informed by weighing up options based on the best available evidence. That means examining the findings from many studies to get a full picture of the problem, the potential solutions and the implications of decisions.

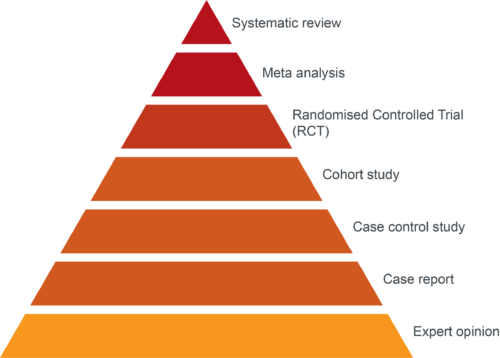

According to the internationally accepted hierarchy of evidence, a systematic literature review or meta-analysis of randomised controlled trials is the highest level of evidence. A ‘gold standard’ systematic review identifies evidence from all the available research on a specific problem, critically examines the quality and relevance of individual studies, objectively analyses the credibility and reliability of the findings and pulls it all together to form a conclusion. The process is independent, methodical, transparent and rigorous. However, it is also time-consuming and resource-intensive.

The Evidence Review, which is also known as a Rapid Review or Rapid Response, has been developed to meet the needs of decision-makers who do not have the luxury of waiting 6-12 months for the outcomes of a systematic review before taking action.1, 2 For example, when COVID-19 emerged in 2020 as a global public health crisis, the speed and volume of information quickly overwhelmed decision-makers, and there was a growing imperative to make timely decisions based on the best available evidence.3

The Evidence Review retains the key elements of scientific rigour and transparency of a traditional systematic review, but is designed to be flexible. It focuses predominantly on practice and policy issues and can be conducted in a much shorter timeframe (6-12 weeks) than a traditional systematic review.

Each review is tailored to the needs of the decision-makers. The first critical stage, a negotiation between the researchers and the end-users, determines the scope and focus of the review and the timeframe for delivery. Together, they develop clearly articulated, focused questions that can be answered within the agreed timeframe. The PICO approach, which defines the Population, Intervention, Comparator and Outcomes of interest, is useful for framing the questions and guiding the review process. This is where the researchers find out what is needed and the decision-makers find out what is possible.
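To make the PICO elements concrete, here is a minimal Python sketch that stores one review question as a record and assembles it into a single answerable question. The class name `PicoQuestion` and the example wording (adapted from the healthcare-worker bullying scenario in this article) are hypothetical illustrations, not part of any standard tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PicoQuestion:
    """One focused review question, broken into its PICO elements."""
    population: str
    intervention: str
    comparator: str
    outcome: str

    def as_question(self) -> str:
        # Assemble the four elements into a single, answerable question.
        return (
            f"In {self.population}, does {self.intervention}, "
            f"compared with {self.comparator}, affect {self.outcome}?"
        )


# Hypothetical example based on the bullying scenario in this article.
q = PicoQuestion(
    population="healthcare workers",
    intervention="an anti-bullying program",
    comparator="usual workplace policy",
    outcome="reported bullying incidents",
)
print(q.as_question())
```

Writing each element down separately makes it easier for researchers and end-users to see, and agree on, exactly what a question does and does not cover.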

The parameters of the review are defined by the research questions. This involves identifying the inclusion and exclusion criteria, the length of the search period and the types of studies that are likely to address the questions. For example, a review of effective interventions to protect healthcare workers from workplace bullying during the COVID-19 pandemic would exclude bullying in other workplaces and include only research published since the beginning of the pandemic in 2020.
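Once the criteria are set, each citation can be checked against them in the same order every time. The Python sketch below encodes the example criteria above (published in or after 2020, healthcare workers, workplace bullying) and returns a reason alongside each decision so that exclusions can be recorded. The `Citation` fields and the exact category labels are assumptions made for illustration; in practice these judgements are made by a reviewer reading each title and abstract.

```python
from dataclasses import dataclass


@dataclass
class Citation:
    title: str
    year: int
    population: str  # coded by the screener, e.g. "healthcare workers"
    topic: str       # coded by the screener, e.g. "workplace bullying"


def is_eligible(c: Citation) -> tuple[bool, str]:
    """Apply the example inclusion/exclusion criteria; return (decision, reason)."""
    if c.year < 2020:
        return False, "published before the pandemic"
    if c.population != "healthcare workers":
        return False, "not a healthcare workforce"
    if c.topic != "workplace bullying":
        return False, "not about workplace bullying"
    return True, "meets all criteria"


print(is_eligible(Citation("Example study", 2018, "healthcare workers", "workplace bullying")))
```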

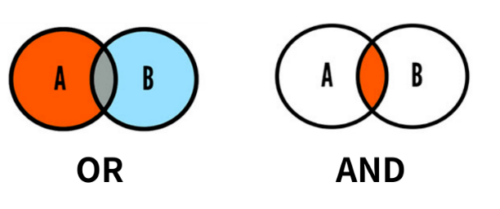

Developing an effective and efficient search strategy takes time, but the investment is well worth it. The search strategy involves the following steps:

Documenting the strategy is essential, as is checking the relevance of citations along the way to ensure the search is comprehensive but targeted. Once the strategy generates relevant articles without too much irrelevant material, run the search through each selected database and download the citations into a reference manager, such as EndNote.
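Many bibliographic databases accept Boolean search strings, and a common pattern is to combine synonyms for one concept with OR and then join the concepts with AND. The sketch below builds such a string from a dictionary of concepts so that each combination can be logged as part of the documented strategy. The function name `build_query` and the search terms are hypothetical, and the exact syntax (phrase quoting, truncation, field tags) differs between databases, so the output would need adapting to each one.

```python
def build_query(concepts: dict[str, list[str]]) -> str:
    """OR the synonyms within each concept, then AND the concepts together."""
    blocks = []
    for terms in concepts.values():
        # Quote multi-word phrases so they are searched as a unit.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        blocks.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(blocks)


# Hypothetical concepts for the healthcare-worker bullying example.
query = build_query({
    "population": ["healthcare workers", "nurses", "physicians"],
    "exposure": ["bullying", "workplace harassment"],
    "setting": ["COVID-19", "pandemic"],
})
print(query)
```

Keeping the concepts in a structure like this makes it straightforward to swap one synonym in or out and record how the number of hits changes.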

Given the short timeframe (compared with a traditional systematic review), there is no time to trawl through thousands of citations. Recording different combinations of key terms gives you an idea of which terms yield the most relevant articles. If there are too many citations to manage (more than 500), limits may be applied, such as shortening the search period and restricting articles to higher levels of evidence (e.g. systematic reviews, randomised controlled trials). Conversely, if there are too few articles (fewer than 50) to answer the research questions, the search may be expanded to include lower levels of evidence (e.g. uncontrolled studies, case reports or qualitative research) or the search period may be extended. Sometimes a scoping search reveals that there are too few published articles on a topic to undertake a useful Evidence Review, and an alternative approach may be considered (e.g. an Environmental Scan).
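The rule of thumb on search yield can be written as a small decision function. This is a sketch assuming the thresholds given in the paragraph above (more than 500 citations is too many, fewer than 50 is too few); the function name and returned messages are hypothetical, and in a real review these numbers are a guide for judgement rather than fixed cut-offs.

```python
def suggest_adjustment(n_citations: int, too_many: int = 500, too_few: int = 50) -> str:
    """Suggest how to adjust a search based on how many citations it returns."""
    if n_citations > too_many:
        # Too many to screen in the time available: tighten the search.
        return "narrow: shorten the search period or limit to higher-level evidence"
    if n_citations < too_few:
        # Too few to answer the questions: loosen the search.
        return "broaden: include lower-level evidence or extend the search period"
    return "manageable: proceed to screening"


for n in (1200, 300, 20):
    print(n, "->", suggest_adjustment(n))
```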

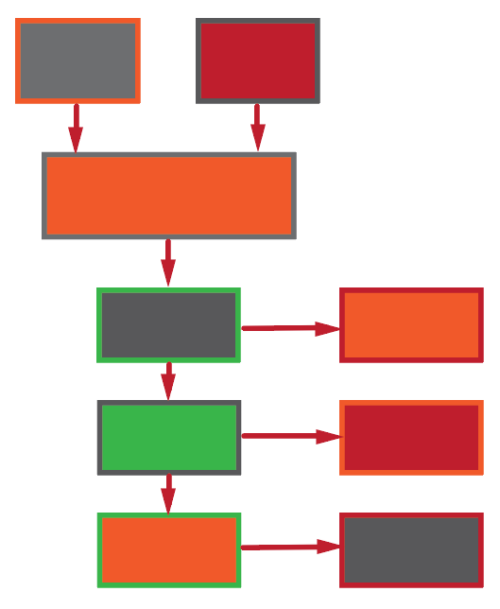

After the citations from all sources have been downloaded into EndNote, duplicates are removed and the remaining citations are screened by title and abstract according to the inclusion and exclusion criteria. The full texts of the remaining articles are then obtained and screened for eligibility. The process is documented in a PRISMA flow chart, which records the reasons why articles were excluded. Maintaining transparency in this process means the end-user can be confident that the process was unbiased and comprehensive, and that the final evidence base was the best available to answer the research questions.
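The deduplication and screening steps, and the counts a PRISMA flow chart reports, can be sketched in Python as follows. This is not how EndNote detects duplicates; it is a simplified stand-in assuming each record is a dictionary with a `title` and an optional `doi`, matching on DOI when present and otherwise on a normalised title. The `screen_abstract` and `screen_full_text` arguments are hypothetical callables standing in for reviewer decisions; each returns a `(keep, reason)` pair so that full-text exclusions can be tallied by reason.

```python
import re
from collections import Counter


def dedup_key(record):
    # Prefer the DOI; otherwise fall back to a lower-cased title with punctuation stripped.
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return "doi:" + doi
    return "title:" + re.sub(r"[^a-z0-9]+", " ", record["title"].lower()).strip()


def prisma_tally(records, screen_abstract, screen_full_text):
    """Count records at each PRISMA stage; screeners return (keep, reason)."""
    seen, unique = set(), []
    for r in records:
        key = dedup_key(r)
        if key not in seen:
            seen.add(key)
            unique.append(r)

    # Title/abstract screening: only the keep/drop decision is counted here.
    after_abstract = [r for r in unique if screen_abstract(r)[0]]

    # Full-text screening: exclusions are tallied by reason for the flow chart.
    included, reasons = [], Counter()
    for r in after_abstract:
        keep, reason = screen_full_text(r)
        if keep:
            included.append(r)
        else:
            reasons[reason] += 1

    return {
        "identified": len(records),
        "duplicates_removed": len(records) - len(unique),
        "screened": len(unique),
        "excluded_title_abstract": len(unique) - len(after_abstract),
        "full_text_assessed": len(after_abstract),
        "excluded_full_text": dict(reasons),
        "included": len(included),
    }
```

Because the key prefers the DOI, a copy of the same article that lacks a DOI will not be matched, which is one reason duplicates are still checked by hand.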

A data extraction tool (e.g. an Excel spreadsheet) helps ensure that the relevant data are extracted from the included studies. The type of data may vary depending on the focus of the review, but usually includes: citation details (author, title, year), country, study design, population of interest (age, sample size), intervention details, follow-up period, outcomes and results (statistical data, significance). Having all the data in a spreadsheet also makes it easier to transfer relevant information into tables in the report.
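An extraction sheet with these columns can also be produced programmatically. The sketch below uses Python's standard `csv` module, with column names taken from the typical fields listed above; the column names and the placeholder row (`Example A`, which is not a real study) are assumptions for illustration. The resulting CSV file opens directly in Excel.

```python
import csv
import io

# Columns based on the typical extraction fields described in the text.
FIELDS = [
    "author", "title", "year", "country", "study_design",
    "population", "sample_size", "intervention", "follow_up",
    "outcomes", "results",
]


def write_extraction_sheet(rows, stream):
    """Write one row per included study; fields not yet extracted are left blank."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS, restval="")
    writer.writeheader()
    writer.writerows(rows)


buffer = io.StringIO()
write_extraction_sheet(
    [{"author": "Example A", "year": 2021, "study_design": "RCT", "sample_size": 120}],
    buffer,
)
print(buffer.getvalue())
```

Fixing the column list up front means every study is extracted against the same fields, and blank cells make missing data easy to spot.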

Ultimately, decision-makers would like to know that the evidence used as a basis for important decisions is credible and reliable. Quality appraisal covers three domains: level of evidence, quality of evidence and strength of evidence. No individual study is perfect. But studies that try to reduce bias in the way the study was conducted, analysed and reported are more trustworthy than those that fail to do so. Reducing the potential biases in a study means the results are more likely to be close to the truth. Bias can occur at many stages of a study, from recruitment to measurement, analysis and reporting.

Fortunately, there are various checklists available to help identify potential biases and other systematic errors that reduce the quality of a study. Having two independent researchers using checklists to assess the quality maintains objectivity and strengthens the process. Since research findings across studies are rarely consistent, assessing the study quality may explain why findings between similar studies differ and which ones provide the best basis for decision-making. That is, critically appraising the methods of a study distinguishes between a well-written article (good communication) and a well-conducted study (good research).
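Where two reviewers appraise the same studies independently, their ratings can be compared to find the studies that need discussion. The sketch below, assuming each reviewer's ratings are stored as a dictionary from a hypothetical study ID to a quality rating, reports simple percent agreement (not a chance-corrected statistic such as Cohen's kappa) and lists the disagreements to resolve.

```python
def compare_appraisals(reviewer_a: dict[str, str], reviewer_b: dict[str, str]):
    """Return percent agreement and the studies the two reviewers rated differently."""
    # Only compare studies that both reviewers have rated.
    studies = sorted(reviewer_a.keys() & reviewer_b.keys())
    if not studies:
        raise ValueError("no studies rated by both reviewers")
    disagreements = [s for s in studies if reviewer_a[s] != reviewer_b[s]]
    agreement = 1 - len(disagreements) / len(studies)
    return agreement, disagreements


a = {"S1": "high", "S2": "low", "S3": "moderate", "S4": "high"}
b = {"S1": "high", "S2": "moderate", "S3": "moderate", "S4": "high"}
print(compare_appraisals(a, b))
```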

Once the systematic steps have been undertaken and documented, the most important part is to determine what it all means. This involves referring back to the aims of the review, the research questions and the needs of the end-user. The tables of extracted data form a good basis for a narrative synthesis. The similarities and differences in outcomes can be analysed to identify trends and inconsistencies, and the strengths and weaknesses of the literature acknowledged without dwelling on them too much. Finally, the principal findings are identified and stated in clear, plain language, considering the implications for those who are likely to be affected by decisions.

The Evidence Review is a valid approach that strikes a good balance between methodological rigour and efficiency. It aims to synthesise the best available evidence to inform practice and policy, whether it is to prevent harm, identify optimal treatment or reduce administrative costs. The key strength of this approach is that it can be tailored to the needs of the end-users, not only in the focus and scope, but also in the way the findings are presented – clearly and succinctly, but underpinned by a robust and transparent process.
